Open Letter to Faculty on Wake Forest's New Admissions Policy With Annotated Bibliography

By Joseph A. Soares

Associate Professor, Department of Sociology

Wake Forest University (soaresja@wfu.edu)

Universities and colleges are communities that extend beyond faculty, administrators, staff, students and alumni to our families and friends. The quality of our intergenerational compact is annually refreshed by our admissions policies and practices. When we select our students, we are selecting the face of Wake Forest for today and tomorrow.

Wake Forest has taken the bold step of becoming the first highly ranked national university to select its students with criteria that move us beyond the pitfalls of standardized tests. Like all very selective colleges and universities in the United States, Wake Forest has for quite some time used the SAT as a key criterion in its admissions decisions. We have always striven to select an academically excellent, multi-talented, and diverse undergraduate body. Yet, as we have become more competitive within the top tier of America’s best universities, we have become increasingly mindful of the shortcomings of SAT-driven admissions. We have become convinced that the SAT is a weak measure of academic ability, and that when we rely on it we pay a price: by misjudging our students’ academic strengths, we undercut our diversity and our talent pools.

Events at the University of California between 2001 and 2005 made us aware of the inadequacies of the SAT. Before Wake Forest, California was the last highly ranked national university to distance itself from the SAT. It was thanks to the University of California that the ETS discarded the old SAT, with its verbal analogies, as of March 2005. California voted to discontinue using the SAT as of that date, and rather than lose its biggest customer, the ETS discarded the old version and created a new test.

California arrived at the decision to drop the old SAT through a reexamination of its admissions criteria that was forced on it by the elimination of affirmative action. Race and gender were excluded as valid concerns for admissions by a vote of the Regents of the University of California in 1995, and then by the electorate passing Proposition 209 in 1996. After 1996, the university looked for alternative ways to sustain its diversity by reexamining its remaining admissions tools.

California’s President, Richard Atkinson, asked researchers on its Board of Admissions and Relations with Schools (BOARS) to reevaluate California’s criteria for selecting undergraduates. BOARS’s statisticians, Saul Geiser and Roger Studley, discovered that the SAT did not perform as advertised. It did not strongly predict college grades.

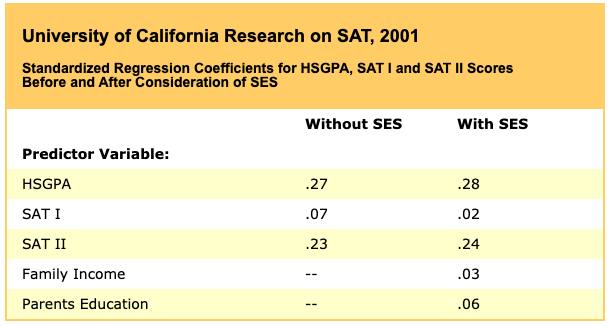

Geiser and Studley investigated the role of the SAT in admissions by examining four entering cohorts, a total of approximately 78,000 undergraduates, who matriculated at California from 1996 to 1999. They found that high school grades (HSGPA) and subject-specific tests, such as the SAT II, strongly predicted college grades, but the SAT did not. In two of the four years they researched, the SAT was not a statistically significant variable in their multivariate regression models for predicting grades. For the two years in which it did show a weak correlation, the SAT’s contribution looked more spurious than substantive. The SAT seemed to matter most when one did not include information on family income and parents’ education. Once socioeconomic status (SES) variables were made part of the regression model, the SAT contributed nothing of value to BOARS’ ability to predict grades for any of the cohorts under scrutiny. The inclusion of SES variables did not, however, have the same nullifying impact on HSGPA or on subject-specific tests such as the SAT II; these continued to be statistically significant predictors of college grades even after family SES variables were added to the model. In short, controlling for SES does not diminish the effects of HSGPA or the SAT II, but it shrinks the effect of the SAT I to the point of being negligible. The accompanying figure reproduces the regression model results of Geiser and Studley’s 2001 study.

As Geiser explained in correspondence with me, “In this first plot, each 100-point increase in SAT II scores adds .18 of a grade point to predicted freshman GPA, whereas a 100-point increase in SAT I scores adds only about .05 of a grade point.

“The second conditional effect plot then adds family income and parents’ education into the regression model. Here the predictive superiority of the SAT II is even stronger. Controlling for SES, HSGPA, and SAT I scores, each 100-point increase in SAT II scores adds .19 of a grade point to predicted freshman GPA. In contrast, controlling for SES, HSGPA and SAT II scores, each 100-point increase in SAT I scores now adds only .02 of a grade point.”
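To make Geiser’s coefficients concrete, the short Python sketch below converts them into predicted changes in freshman GPA for a given increase in test scores. It assumes, as a linear regression does, that each effect scales linearly with the score while the other predictors are held constant. The dictionary keys and the function name `predicted_gpa_change` are hypothetical labels for this illustration and are not taken from the UC study.

```python
# Coefficients quoted by Geiser: change in predicted freshman GPA per
# 100-point increase in each test, holding the other predictors constant.
# "without_ses" controls for HSGPA and the other test; "with_ses" also
# controls for family income and parents' education.
COEFFICIENTS_PER_100 = {
    "without_ses": {"sat_ii": 0.18, "sat_i": 0.05},
    "with_ses": {"sat_ii": 0.19, "sat_i": 0.02},
}


def predicted_gpa_change(test: str, point_increase: float, model: str) -> float:
    """Linear change in predicted freshman GPA for a given score increase.

    `test` is "sat_i" or "sat_ii"; `model` is "without_ses" or "with_ses"
    (labels invented for this sketch). Assumes the effect is linear in the score.
    """
    per_100 = COEFFICIENTS_PER_100[model][test]
    return per_100 * point_increase / 100


if __name__ == "__main__":
    for model in ("without_ses", "with_ses"):
        sat_ii = predicted_gpa_change("sat_ii", 200, model)
        sat_i = predicted_gpa_change("sat_i", 200, model)
        print(f"{model}: +200 SAT II -> {sat_ii:+.2f} GPA, +200 SAT I -> {sat_i:+.2f} GPA")
```

For a 200-point increase, the sketch gives 0.36 of a grade point for the SAT II versus 0.10 for the SAT I before SES controls, and 0.38 versus 0.04 after them.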

In 2007, Saul Geiser and Maria Veronica Santelices revisited the question of the SAT, HSGPA, SES and academic outcomes. They noted that “SAT scores exhibit a strong, positive relationship with measures of socioeconomic status … whereas HSGPA is only weakly associated with such measures” (2007: 2). When one statistically controls for SES, the weak contribution of the SAT in a regression model drops to near zero, while the statistical significance of HSGPA and subject achievement tests goes up. The cost of adding the SAT to the model is to stack the odds against underprivileged youths.
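The statistical mechanism described here can be illustrated with a small simulation: a predictor can look useful only because it stands in for a variable that has been left out of the model. The sketch below uses synthetic data, not the University of California data. All variable names and coefficients (0.6, 0.1, 0.5, 0.3) are assumptions chosen for the example. By construction, college GPA depends on HSGPA and SES but not on the SAT, while SAT scores are strongly tied to SES. The regression is a plain ordinary-least-squares fit written in standard-library Python.

```python
import random


def ols(rows, y):
    """Least-squares coefficients via the normal equations (X'X) b = X'y.

    `rows` holds one tuple of predictor values per observation; an intercept
    column is prepended, so the result is [intercept, b1, b2, ...].
    Solved by Gauss-Jordan elimination with partial pivoting, which is
    adequate for a handful of well-scaled predictors.
    """
    X = [(1.0, *r) for r in rows]
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    a = [
        [sum(x[i] * x[j] for x in X) for j in range(k)]
        + [sum(x[i] * yi for x, yi in zip(X, y))]
        for i in range(k)
    ]
    for col in range(k):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        # Eliminate this column from every other row.
        for r in range(k):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [v - factor * p for v, p in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def simulate(n=20_000, seed=1):
    """Synthetic data, NOT University of California data.

    Assumed data-generating process: SES strongly shapes SAT scores,
    weakly shapes HSGPA, and college GPA depends on HSGPA and SES
    but has no direct SAT term.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        ses = rng.gauss(0, 1)
        sat = 0.6 * ses + rng.gauss(0, 0.8)                # strongly tied to SES
        hsgpa = 0.1 * ses + rng.gauss(0, 1)                # weakly tied to SES
        gpa = 0.5 * hsgpa + 0.3 * ses + rng.gauss(0, 0.5)  # no SAT term
        rows.append((ses, sat, hsgpa, gpa))
    return [list(col) for col in zip(*rows)]


ses, sat, hsgpa, gpa = simulate()

# Without SES, the SAT picks up part of the omitted SES effect.
b_without = ols(list(zip(hsgpa, sat)), gpa)
# With SES in the model, the SAT coefficient falls to about zero.
b_with = ols(list(zip(hsgpa, sat, ses)), gpa)

print(f"SAT coefficient, SES omitted:  {b_without[2]:.3f}")
print(f"SAT coefficient, SES included: {b_with[2]:.3f}")
```

With these assumed parameters, the SAT coefficient should come out clearly positive (around 0.18 in expectation) when SES is omitted, and close to zero once SES is included. The HSGPA coefficient stays near its built-in value of 0.5.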

In sum, California found that the SAT correlated with family income more powerfully than with college grades. California showed that high-school grades were a better predictor of college performance than the SAT I, and that the correlation of HSGPA with college grades grew over the course of the undergraduate’s education; HSGPA was an even stronger predictor of students’ college GPAs in their senior year than it was of their freshman grades.

The news was shocking and led the Faculty Senate to request background information on California’s relation to the SAT. Archival research into the history of admissions, in particular by John Aubrey Douglas of Berkeley’s Center for the Study of Higher Education (see his book, The Conditions for Admission, Stanford University Press, 2007), found that BOARS had repeatedly rejected the SAT in the past as a valid measure of academic performance. Research conducted by BOARS in 1955, 1958, 1962, and 1964 showed again and again that the SAT contributed nothing to California’s ability to predict the academic performance of applicants. California adopted the SAT in 1968 for the wrong reasons: not to predict academic performance, but to show that it could compete with Harvard and other high-prestige universities, and to ease the bureaucratic exclusion of baby-boomer youths from a seemingly ever-rising applicant pool.

Other universities were also searching for alternative admissions strategies. In 1997, the University of Texas adopted, to very good effect, a 10% solution: it admits everyone in the top 10% of a Texas high school senior class without any consideration of the SAT. Social scientists have drawn on the Texas experiment to establish that if we set aside the SAT and rely on high school grades and class rank instead, we can get academically excellent and diverse students. The only “academic measure” that stands in the way of our admitting racial minorities and low-SES white youths with high academic ability is the SAT.

Large state universities are not the only institutions investigating this issue. Research on the role of standardized tests at elite private colleges has arrived at similar conclusions to those drawn by statisticians at California and Texas. My book, The Power of Privilege: Yale and America’s Elite Colleges, Stanford University Press, 2007, examines admissions from the perspective of top-tier private institutions. Recently, the Chronicle of Higher Education published a review essay on four new books on college admissions, stating that, “Of all the authors, Soares is the best at explaining the statistical applications of the numerical measures used in the admissions process and why a student’s ACT or SAT scores are not good predictors of his or her freshman GPA at the most competitive colleges.”

The moral of this story is clear. The SAT is not a good predictor of academic performance, but it has been a reliable indicator of family income. For too long, universities have used the SAT to keep up with the Joneses, and in the process, due to the SAT’s correlation with family income, we have ended up with more and more privileged undergraduates.

Provost Jill Tiefenthaler’s letter announcing Wake Forest’s new policy reviewed the positive experiences of a handful of top liberal arts colleges that have used SAT-optional admissions for a number of years. It is worth underscoring that Bates College found no meaningful differences in the college grades or graduation rates of undergraduates admitted with or without SAT scores, while Hamilton found that students who did not submit SAT scores had higher GPAs than their SAT-submitting peers. All SAT-optional top liberal arts colleges have experienced a jump in the size of their application pools, and have been able to increase their numbers of racial minorities and low-SES students.

Making the SAT optional is a win-win situation for us. It allows us to tell the truth about the SAT: that it is not the gold standard for predicting college performance — insofar as any academic measure does that, it is HSGPA. And SAT “not required” admissions will give us greater social diversity and academically stronger students.

College admissions have never been, and likely never will be, exclusively determined by academic factors; many other valid considerations, from social diversity to special talents, enter into our decision. The academic variables we do use, however, should be real measures of intellectual performance. Wake Forest is committed to looking at the whole student, beyond unreliable test scores, and our new policy empowers us to do that.

Below, I identify, and sometimes comment on, relevant items that can be found on the web or through a library search.

- An article from Inside Higher Ed that discusses the momentum among liberal arts colleges toward SAT-optional admissions.

http://insidehighered.com/news/2006/05/26/sat

- This is a Bates College press release and the text of a talk on its experience with SAT-optional admissions.

http://www.bates.edu/ip-optional-testing-20years.xml

- As the title suggests, this item from FairTest lists the more than 750 colleges and universities that have already made the SAT optional.

http://www.fairtest.org/univ/optional.htm

- My book, The Power of Privilege: Yale and America’s Elite Colleges, Stanford University Press, 2007, chronicles the history of elite college admissions in the US and looks at the effects of standardized tests and the search for leaders.

For a partial list of reviews, see:

http://www.sup.org/book.cgi?book_id=5637%205638

- This is a historical background report by researchers at the University of California on the “use of admissions tests by the University of California.” The report narrates the stages of California’s experience and recommends creating a new test to replace the SAT.

For the full report, see:

http://www.ucop.edu/news/sat/boars.pdf

- This is the statistical report by Saul Geiser and Roger Studley that directly led to California’s decision against the SAT I.

http://www.ucop.edu/sas/research/researchandplanning/pdf/sat_study.pdf

- This Center for the Study of Higher Education (CSHE) report, “Validity of High-School Grades in Predicting Student Success Beyond the Freshman Year: High-School Record vs. Standardized Tests as Indicators of Four-Year College Outcomes,” by Saul Geiser and Maria Veronica Santelices, 2007, turns the conventional wisdom on its head. They found that it is a myth that high school grades are unreliable, inflated, and less valuable than SAT scores in predicting college grades.

Using California data that tracked the educational careers of approximately 80,000 students, they found that high-school grades were more reliable than SAT scores in predicting college grades, and that the power of HSGPA to predict grades grew as the students’ careers moved forward: HSGPA predicts senior-year grades even more strongly than it predicts freshman grades. They wrote, “HSGPA is the strongest predictor of cumulative fourth-year college grades at all UC campuses” (2007: 11). The same holds when the data are broken down by subject field; for each undergraduate major, “HSGPA stands out as the strongest predictor of cumulative college grades in all major academic fields” (2007: 12). HSGPA is our best single measure of academic performance.

HSGPA is not as compromised by family SES as are SAT scores. In terms of raw correlations, the SAT verbal score correlated with family income at the .32 level, which is to say moderately well, while HSGPA correlated with family income at the .04 level, which is to say not at all.

On the related consequences for SES and racial diversity, they found, “SAT I verbal and math scores exhibit a strong, positive relationship with measures of socioeconomic status (SES) such as family income, parents’ education and the academic ranking of a student’s high school, whereas HSGPA is only weakly associated with such measures. As a result, standardized admissions tests tend to have greater adverse impact than HSGPA on underrepresented minority students, who come disproportionately from disadvantaged backgrounds” (2007: 2). Comparing the two measures, only 4% of minority students at California had SAT scores in the top 10%, whereas approximately 9% of minority students had an HSGPA in the top 10% of all high school students (2007: 2). If one works with HSGPA rather than the SAT, more than twice as many minority students would be eligible for very selective university admission.
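The correlations and percentages in the last two paragraphs can be put on a common footing with a little arithmetic. The sketch below squares each correlation to get the share of variance the measure has in common with family income (the standard r-squared reading of a correlation). It then compares the two top-10% shares. The function name `shared_variance` and the pool of 10,000 students are hypothetical, used only for illustration.

```python
def shared_variance(r: float) -> float:
    """Share of variance two measures have in common: Pearson's r squared."""
    return r ** 2


# Raw correlations with family income reported by Geiser and Santelices (2007).
SAT_VERBAL_R = 0.32
HSGPA_R = 0.04

# Share of minority students scoring in the top 10% on each measure (2007: 2).
TOP10_SAT = 0.04
TOP10_HSGPA = 0.09

sat_share = shared_variance(SAT_VERBAL_R)
hsgpa_share = shared_variance(HSGPA_R)
ratio = TOP10_HSGPA / TOP10_SAT

print(f"SAT verbal shares {sat_share:.1%} of its variance with family income")
print(f"HSGPA shares {hsgpa_share:.2%} of its variance with family income")
print(f"Top-10% share of minority students is {ratio:.2f} times higher by HSGPA than by SAT")

# Hypothetical pool size, chosen only to turn percentages into head counts.
POOL = 10_000
print(f"Of {POOL:,} minority students: {TOP10_SAT * POOL:.0f} via SAT, "
      f"{TOP10_HSGPA * POOL:.0f} via HSGPA")
```

Squaring the correlations shows that the SAT verbal score shares about 10% of its variance with family income, against about 0.16% for HSGPA, a 64-fold difference. In the hypothetical pool, the top-10% cut would reach 400 minority students by SAT and 900 by HSGPA.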

For the full report, see:

http://cshe.berkeley.edu/publications/publications.php?a=9

- Saul Geiser drew our attention to an article that evaluates the impact of SES on the predictive validity of SAT I scores: Jesse Rothstein, a Princeton economist, “College Performance Predictions and the SAT,” Journal of Econometrics (vol. 121, issue 1-2, pp. 297-317). Rothstein concludes that “[A] conservative estimate is that traditional methods and sparse models [i.e., those that omit SES] overstate the SAT’s importance to predictive accuracy by 150 percent.”

http://www.princeton.edu/~jrothst/published/SAT_MAY03_updated.pdf

- This June 2004 CSHE report, “Learning and Academic Engagement in the Multiversity,” on academic engagement and disengagement, is relevant to our concerns. It found that the SAT does predict something about college: a student’s likelihood of spending more time on social activities than on academic ones. The report says, “academic engagement is inversely related to scores on the SAT verbal test. Because of the negative relationship between family income and academic engagement, and because of the strong positive correlation between SAT scores and family income, it turns out that SAT scores … are positively related to measures of social engagement and academic irresponsibility and negatively related to measure of academic engagement” (2004: 34). In sum, students with high SAT scores are less involved with intellectual life on campus than their lower-scoring classmates.

For the full report, see:

http://cshe.berkeley.edu/publications/publications.php?id=73

- This August 2007 article in American Sociological Review, the top journal in my field, by Sigal Alon, Tel Aviv University, and Marta Tienda, Princeton University, “Diversity, Opportunity, and the Shifting Meritocracy in Higher Education,” 72, 4: 487-511, makes the case that one can get both academic excellence and social diversity if one relies on HSGPA and class rank, but not if one uses SAT scores.

Their work covers the period from the 1980s to the present, using four national data sets and evidence from the University of Texas system.

They find that since 1980, elite colleges have become more competitive and more reliant on the SAT, with the social effect of raising the SES composition of elite colleges. Affirmative action programs have been used to redress racial imbalances, but with controversial effects: the courts have not been favorable, and opponents argue that the programs do not always work and that minorities admitted under them may be stigmatized as academically unprepared. The stigma is undeserved, and this research suggests that affirmative action programs are not a necessary means to achieve diversity.

As their abstract states, “Statistical simulations that equalize, hold constant, or exclude test scores or class rank from the admission decision illustrate that reliance on performance-based criteria [rather than affirmative action criteria] is highly compatible with achieving institutional diversity and does not lower graduation rates. Evidence from a natural experiment in Texas after the implementation of the ‘top 10 percent’ law supports this conclusion. The apparent tension between merit and diversity exists only when merit is narrowly defined by test scores.”

For the entire article, see:

http://www.asanet.org/galleries/default-file/Aug07ASRFeature.pdf

- The story and findings of an ETS whistle-blower, Roy Freedle, are important to know. He exposed the racial and SES biases hidden in the questions used for the verbal analogies sections of the SAT. The Atlantic Monthly published an article on Freedle.

For the article, see:

http://www.theatlantic.com/doc/200311/mathews

- Freedle’s own article in the Harvard Educational Review exposes the social biases of the SAT’s verbal analogies.

For the article, see:

http://www.hepg.org/her/abstract/23

As explained in my book,

“Freedle retired in 1998 from 30 years of research at the ETS on language and tests. Freedle was uniquely positioned to tell us about the mechanics of racial and SES correlations with particular types of verbal questions. He published a summary of his findings in the Harvard Educational Review.

Freedle and others have found that racial identity matters most for verbal analogies and antonyms and least for reading comprehension questions; and that there are statistically significant differences in the performance of Whites and Blacks depending on whether the analogies and antonyms are “hard” or “easy.” Researchers at the ETS rate verbal analogies as being hard or easy in degree of difficulty. The same points, however, are awarded for getting a hard one right as for getting an easy one.

Freedle provides us with the unexpected finding that Blacks do best on the hard analogies and least well on the easy analogies; conversely, Whites do better than Blacks on easy verbal analogies, and less well on hard ones.

Why do Blacks do their best on the hard questions? Freedle is confident that a “culturally based interpretation helps explain why African American examinees (and other minorities) often do better on hard verbal items but do worse than matched-ability Whites on many easy items.” Freedle writes, “easy analogy items tend to contain high-frequency vocabulary words [such as ‘canoe’] while hard analogy items tend to contain low-frequency vocabulary words,” such as “intractable.” Easy analogies are everyday conversation terms, while hard analogy terms “are likely to occur in school-related contexts.” The more everyday a verbal analogy is, the more likely it is to transmit racial differences. Hard analogies, employing rare and precisely defined terms, more often learned in school than acquired in the home or neighborhood, do not communicate as much bias as easy ones. Blacks who take the SAT are drawing more effectively than Whites on average from their formal schooling, but they lack the cultural knowledge that White youths have as contextual background, enabling them to do better than Blacks on the easy questions. Freedle shows us that if one relied only on hard verbal analogies, the scores of Black youths would improve by as much as 300 points.

The ETS disputes Freedle’s findings, claiming his evidence is thin. And they have threatened legal action if he has in retirement any SAT data from the ETS to thicken his analyses. In effect, the ETS told Freedle, you cannot prove this, and if you have the data to attempt a proof, we will sue you. They would like him to be silenced and ignored” (Soares 2007: 159-160).

- This CSHE March 2008 report, “Does Diversity Matter in the Educational Process? An Exploration of Student Interactions by Wealth, Religion, Politics, Race, Ethnicity and Immigrant Status at the University of California,” shows that student diversity is key to getting students to think about things from a social perspective different from their own. This is a very exciting report because it is the first to document and prove, statistically, the educational benefits of diversity. The US Supreme Court, and others, have asserted or assumed the cognitive advantages of diversity; this is the first study to quantify those benefits.

For example, it is not enough to have race covered in the curriculum; students learn as much, if not more, from interactions across racial groups outside the classroom as from discussions inside it. Perversely, an SAT-based admissions policy depresses both our social diversity and the cognitive stimulation of our student body. Our education of white and black students about social differences, for example, is harmed by the social homogeneity that flows from very selective SAT admissions.

See the 2008 Steve Chatman report:

http://cshe.berkeley.edu/publications/publications.php?a=21

- This study, by Wake Forest faculty and graduate students, examined race, SAT scores, Wake Forest GPA and class rank. It found that Wake Forest’s black students on average scored lower on the SAT I than whites, but that there were no GPA or class rank effects by race. In sum, black students, despite SAT scores lower than those of whites, did as well academically, which strongly suggests that the SAT carries a racial bias. See: Lawlor, S., Richman, S. & Richman, C.L. (1997). The validity of using the SAT as a criterion for Black and White students’ admission to college. College Student Journal, 31, 507-15.

- University of Georgia study on the predictive validity of the new SAT, see:

http://www.terry.uga.edu/~mustard/New%20SAT.pdf

Professor David Mustard, one of the authors of this study, informed me in an e-mail that the new SAT adds only one percentage point of predictive power to the explanation of college grade variance beyond what we know from HSGPA, AP grades, and SES.

- Saul Geiser’s 2008 paper defending the use of achievement tests but opposing the use of aptitude tests like the SAT I. In “Back to Basics: In defense of achievement (and achievement tests) in college admissions,” Geiser tells us, “The SAT is a relatively poor predictor of student performance; … As an admissions criterion, the SAT has more adverse impact on poor and minority applicants than high-school grades, class rank and other measures of academic achievement. … Irrespective of the quality or type of school attended, high-school GPA proved the best predictor not only of freshman grades … but also long-term college outcomes such as cumulative grade-point average and four-year graduation.” Geiser favors admissions practices that emphasize HSGPA, class rank, and subject “achievement” tests, and that discount or drop SAT scores, as do I.

For the full report, see:

http://cshe.berkeley.edu/publications/publications.php?id=313


Wake Forest News
